Deploying applications to the Docker/NGINX server using GitLab CI

In a previous post, I talked about setting up a Docker- and NGINX-based server for running Docker-based web sites and applications. Now, I want to show my process for continuously deploying my apps with a single git push, leveraging the power of GitLab CI.

General principle

The full process looks something like this:

We are using a standard private GitLab project to host the source code, and push changes there from the local machine via Git. From there, the GitLab CI pipeline takes over, and performs the build and deploy automatically.

GitLab provides a Docker registry for every hosted project, with provisions for the CI jobs to push and pull built container images using automatically provided credentials.
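As a hedged sketch of how those automatically provided credentials reach a job: GitLab exposes the registry address and login as predefined CI/CD variables (`CI_REGISTRY`, `CI_REGISTRY_USER`, `CI_REGISTRY_PASSWORD`), so no secrets have to be configured by hand. The variant below pipes the password to `docker login --password-stdin` instead of passing it with `-p` as the pipelines in this post do, which keeps it out of docker's command-line arguments. The hidden job name `.registry_login` is only an illustrative choice; a real job would pull it in with `extends: .registry_login`.

```yaml
# Hidden job (leading dot): never run on its own, reused via "extends"
.registry_login:
  before_script:
    # CI_REGISTRY_USER / CI_REGISTRY_PASSWORD are predefined by GitLab for each job
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
```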

The pipeline for each project is described in the `.gitlab-ci.yml` file in the root of the repository. It uses two execution environments (runners) to perform the tasks (jobs):

- The shared runners are provided by GitLab free of charge (up to a free usage limit) and do the heavy lifting of building and containerizing the project and pushing the built images to the registry. These runners use the `docker` executor, meaning they spin up a fresh container from a Docker image for each job and run the job inside it, fully isolated, tearing it down once the job is done. This allows us to specify which image is used for our jobs, making it easy to have the necessary build environment for whatever project is being built.
- A private runner on our VPS is used to pull the built container images and run them using `docker-compose`. It uses the `shell` executor, and its user is part of the `docker` group, allowing it to interact with the local Docker daemon as necessary. It is marked with a tag to make sure it only executes the `deploy` jobs.

VPS setup

Make sure the following is installed on the VPS:

- Docker, including docker-compose, to pull and run the application containers. It should already be in place if you came here from the previous post.
- GitLab Runner, to let GitLab execute the deploy jobs on the VPS: install and register it.

When installing and first configuring GitLab Runner on the VPS:

- Use the `shell` executor.
- Add the `gitlab-runner` user to the `docker` user group so that it has the necessary privileges to manage Docker containers: `sudo usermod -aG docker gitlab-runner`
- Add the `vps` tag to mark the runner and differentiate it from the GitLab shared runners.

Don’t forget to enable your runner and the GitLab shared runners for every project you want to use them for.

Examples

Static content (single container)

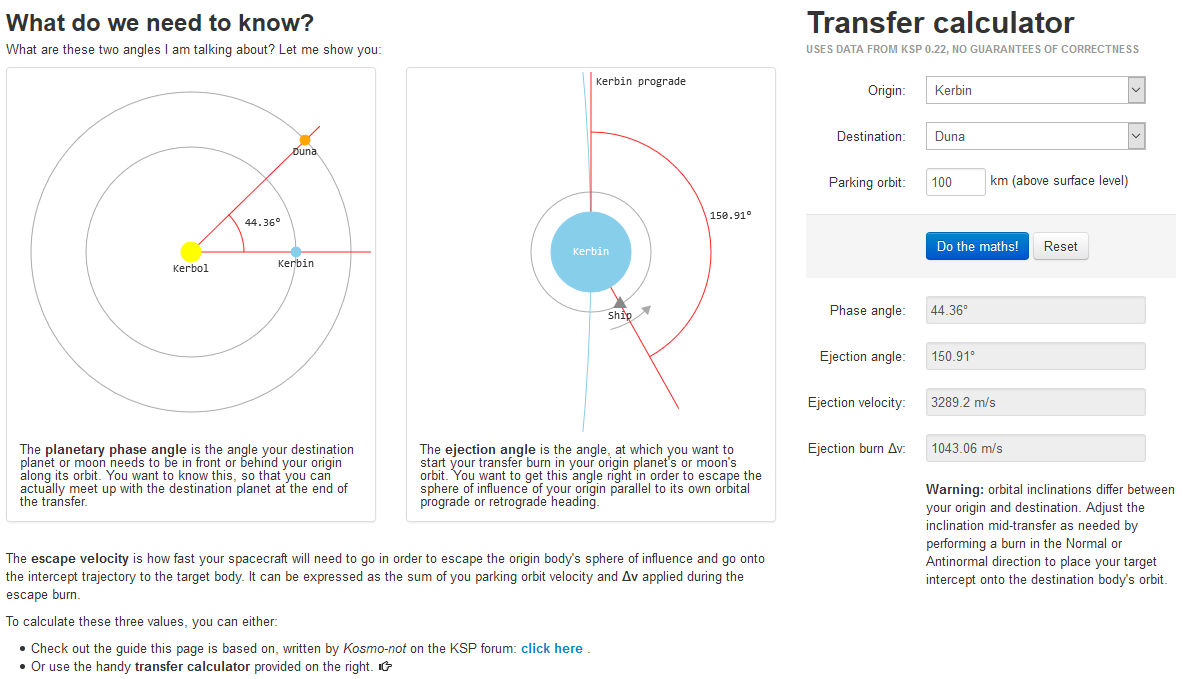

The most basic example is a completely static site with hand-written HTML/CSS/JS and no compilation steps involved. I just happen to still run such a site: ksp.olex.biz, a tiny tool for Kerbal Space Program that explains the basic concepts behind interplanetary orbital flight and offers a calculator for determining the exact parameters of the engine firing needed to reach another planet or moon in the game. I built it back in 2012-13, when I was still a moderator and pre-release tester for KSP, around the time the first version of the game featuring more than just the home planet and its two moons (Kerbin, Mun and Minmus) was released and interplanetary flight became a thing.

This simple site, of course, runs in a single container. I use a tiny nginx:alpine image to serve the static files from NGINX’s default server directory, with no extra configuration of any kind. The Dockerfile is about as simple as they get:

```dockerfile
FROM nginx:alpine
COPY . /usr/share/nginx/html
EXPOSE 80
```

The GitLab CI configuration that builds and deploys the container is also fairly straightforward:

```yaml
stages:
  - build
  - deploy

# Runs on a GitLab shared runner (docker executor)
build_docker:
  stage: build
  tags:
    - docker
  image: docker:latest
  services:
    - docker:dind
  script:
    - docker build -t $CI_REGISTRY_IMAGE:latest .
    - docker tag $CI_REGISTRY_IMAGE:latest $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME

# Runs on the private VPS runner (shell executor), selected by the "vps" tag
deploy_vps:
  stage: deploy
  tags:
    - vps
  script:
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker pull $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
    - docker-compose down
    - docker-compose up -d
```

`build_docker` is the build job that runs on a shared runner. It builds the new image, tags it with the current branch or tag name, logs in to the project’s GitLab registry using the CI-provided credentials, and pushes the image there. The `deploy_vps` job then executes on the private runner on the VPS: it pulls the image and, working in the repository checkout that the runner prepares for every job, uses docker-compose to stop any containers still running from the previous deployment and bring up the current version.
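As written, both jobs run on every push to any branch, and `deploy_vps` runs docker-compose with whatever docker-compose.yml that branch contains. If only `master` should ever reach the server, a restriction along these lines could be added to the deploy job. This is a sketch assuming a GitLab version that supports `rules` and the `CI_COMMIT_BRANCH` variable, with `master` as the deploy branch; on older versions, `only: [master]` expresses the same thing.

```yaml
deploy_vps:
  stage: deploy
  tags:
    - vps
  rules:
    # Create this job only for pipelines on the master branch
    - if: '$CI_COMMIT_BRANCH == "master"'
  script:
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker pull $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
    - docker-compose down
    - docker-compose up -d
```

Feature branches still get built and pushed as images; they just never replace the running deployment.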

The docker-compose.yml for that is as follows:

```yaml
version: '3'

services:
  ksp.olex.biz:
    image: registry.gitlab.com/olexs/ksp.olex.biz:master
    expose:
      - "80"
    environment:
      VIRTUAL_HOST: ksp.olex.biz
      VIRTUAL_PORT: 80
      LETSENCRYPT_HOST: ksp.olex.biz
      LETSENCRYPT_EMAIL: email@somewhere.tld
    restart: unless-stopped
    networks:
      - proxy

networks:
  proxy:
    external:
      name: nginx-proxy
```

This uses the freshly pulled image from my GitLab project registry and configures the container with the environment variables the reverse proxy needs to pick it up and make it available to the world, complete with SSL and an auto-provisioned Let’s Encrypt certificate, as described in the previous post.
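A side note on the `networks` section: the `external: name:` form is valid for compose file formats 3.0 to 3.4 but was deprecated in 3.5, which added a top-level `name` key for networks. If you bump the file format version, the same reference to the existing proxy network could be written as in this sketch (the service definition is abbreviated to its `networks` entry; everything else stays as above):

```yaml
version: '3.5'

services:
  ksp.olex.biz:
    image: registry.gitlab.com/olexs/ksp.olex.biz:master
    # expose, environment and restart settings unchanged from the file above
    networks:
      - proxy

networks:
  proxy:
    # Join the already existing network created for the reverse proxy
    external: true
    name: nginx-proxy
```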

Web app with a build system

Most web sites and apps use more than just manually edited static files. A good example is this very blog you’re now reading: it’s built using Jekyll, and uses a Ruby-based environment to generate the static website from Markdown-based sources that I write. This is the .gitlab-ci.yml file that controls the process:

```yaml
stages:
  - build
  - dockerize
  - deploy

build_jekyll:
  stage: build
  tags:
    - docker
  image: ruby
  cache:
    paths:
      - vendor/bundle
  script:
    - gem install bundler:2.0.2
    - bundle install --path vendor/bundle
    - bundle exec jekyll build -s . -d ./_site
  artifacts:
    paths:
      - _site

build_docker:
  stage: dockerize
  tags:
    - docker
  image: docker:latest
  services:
    - docker:dind
  script:
    - docker build -t $CI_REGISTRY_IMAGE:latest .
    - docker tag $CI_REGISTRY_IMAGE:latest $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME

deploy_vps:
  stage: deploy
  dependencies: []
  tags:
    - vps
  script:
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker pull $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
    - docker-compose down
    - docker-compose up -d
```

Compared to the simple setup in the first example, this pipeline contains one additional job, `build_jekyll`, and the Docker build moves into a separate `dockerize` stage. The new job runs on a shared runner using a `ruby` base image. I use Ruby’s Bundler to install all necessary dependencies for the build process (including Jekyll itself and a bunch of plugins), and keep the `vendor/bundle` path where they are installed in the GitLab CI cache to avoid lengthy reinstalls on every run of the pipeline.
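One possible refinement of that cache, sketched under two assumptions: `Gemfile.lock` is committed to the repository, and the GitLab version supports `cache:key:files`. Keying the cache on the lockfile makes GitLab start a fresh `vendor/bundle` whenever the locked dependencies change, instead of piling new gem versions on top of an old cache.

```yaml
build_jekyll:
  stage: build
  tags:
    - docker
  image: ruby
  cache:
    # A changed Gemfile.lock produces a new cache key, i.e. a clean bundle directory
    key:
      files:
        - Gemfile.lock
    paths:
      - vendor/bundle
  script:
    - gem install bundler:2.0.2
    - bundle install --path vendor/bundle
    - bundle exec jekyll build -s . -d ./_site
  artifacts:
    paths:
      - _site
```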

The `build_jekyll` job builds the blog and puts the generated static content into `_site`, and the folder is packed up and returned to GitLab CI as a job artifact. By default, GitLab CI jobs download the artifacts of all jobs from earlier stages (the `dependencies: []` line in `deploy_vps` opts out of this, since the deploy job has no use for them). Thanks to this, the content built in the first job is available for containerization in the `build_docker` job. The Dockerfile is almost identical to the previous example:

```dockerfile
FROM nginx:alpine
COPY _site /usr/share/nginx/html
EXPOSE 80
```
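Because that artifact download is implicit, it can be worth spelling out which job `build_docker` actually depends on, so that artifacts of any jobs added to the build stage later are not downloaded into its working directory (and thus the Docker build context) for nothing. A sketch of the same job with an explicit `dependencies` list:

```yaml
build_docker:
  stage: dockerize
  tags:
    - docker
  image: docker:latest
  services:
    - docker:dind
  # Download only the _site artifact produced by build_jekyll
  dependencies:
    - build_jekyll
  script:
    - docker build -t $CI_REGISTRY_IMAGE:latest .
    - docker tag $CI_REGISTRY_IMAGE:latest $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME
```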

And finally, the deploy_vps job runs on the VPS and deploys the NGINX container with the compiled site exactly like in the first example.

The docker-compose.yml file is identical, save for the image and virtual host names.

Multi-container app with a backend

A more complex app might have a separate backend and frontend that need to run as multiple containers on the server. This can also be managed with the system I’m describing. Consider this example for an app with the following components:

- a Node.js-based backend exposing an API, built using yarn
- a single-page frontend built using a typical JS framework (Vue.js, React with create-react-app or similar)
- a persistent Docker volume for data storage

.gitlab-ci.yml

```yaml
stages:
  - build
  - dockerize
  - deploy

build-frontend-yarn:
  tags:
    - docker
  stage: build
  image: node:latest
  cache:
    paths:
      - frontend/node_modules/
  script:
    - cd frontend
    - yarn install
    - yarn test
    - yarn build
  artifacts:
    paths:
      - frontend/dist

build-backend-yarn:
  tags:
    - docker
  stage: build
  image: node:latest
  cache:
    paths:
      - backend/node_modules/
  script:
    - cd backend
    - yarn install
    - yarn test

build-frontend-docker:
  tags:
    - docker
  stage: dockerize
  image: docker:latest
  services:
    - docker:dind
  dependencies:
    - build-frontend-yarn
  script:
    - cd frontend
    - docker build -t $CI_REGISTRY_IMAGE/frontend:latest .
    - docker tag $CI_REGISTRY_IMAGE/frontend:latest $CI_REGISTRY_IMAGE/frontend:$CI_COMMIT_REF_NAME
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker push $CI_REGISTRY_IMAGE/frontend:$CI_COMMIT_REF_NAME

build-backend-docker:
  tags:
    - docker
  stage: dockerize
  image: docker:latest
  services:
    - docker:dind
  dependencies: []
  script:
    - cd backend
    - docker build -t $CI_REGISTRY_IMAGE/backend:latest .
    - docker tag $CI_REGISTRY_IMAGE/backend:latest $CI_REGISTRY_IMAGE/backend:$CI_COMMIT_REF_NAME
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker push $CI_REGISTRY_IMAGE/backend:$CI_COMMIT_REF_NAME

deploy-vps:
  stage: deploy
  tags:
    - vps
  dependencies: []
  script:
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker pull $CI_REGISTRY_IMAGE/frontend:$CI_COMMIT_REF_NAME
    - docker pull $CI_REGISTRY_IMAGE/backend:$CI_COMMIT_REF_NAME
    - docker-compose down
    - docker-compose up -d
```

There are two separate jobs in each of the build and dockerize stages. Both build jobs install dependencies (using a cache for the node_modules folder) and run tests. The frontend job also runs `yarn build` to produce the production build of the frontend, minified and ready to be used in a browser, and keeps it as a job artifact. The backend is run directly from source using Node, so no build step is necessary there.

Since we’re using GitLab’s shared runners for the build and dockerize stages, the jobs for the backend and frontend can run in parallel on separate runner instances.
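Within a stage the jobs already run in parallel, but each stage still waits for the whole previous one to finish. If your GitLab version supports the `needs` keyword, each dockerize job can instead start as soon as its own build job succeeds. A sketch of the two dockerize jobs rewritten that way: with `needs`, a job downloads artifacts only from the jobs it lists, so `dependencies` is no longer required.

```yaml
build-frontend-docker:
  tags:
    - docker
  stage: dockerize
  image: docker:latest
  services:
    - docker:dind
  # Start once build-frontend-yarn succeeds; its frontend/dist artifact is downloaded
  needs:
    - build-frontend-yarn
  script:
    - cd frontend
    - docker build -t $CI_REGISTRY_IMAGE/frontend:latest .
    - docker tag $CI_REGISTRY_IMAGE/frontend:latest $CI_REGISTRY_IMAGE/frontend:$CI_COMMIT_REF_NAME
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker push $CI_REGISTRY_IMAGE/frontend:$CI_COMMIT_REF_NAME

build-backend-docker:
  tags:
    - docker
  stage: dockerize
  image: docker:latest
  services:
    - docker:dind
  # Wait only for the backend build job; it has no artifacts to fetch
  needs:
    - job: build-backend-yarn
      artifacts: false
  script:
    - cd backend
    - docker build -t $CI_REGISTRY_IMAGE/backend:latest .
    - docker tag $CI_REGISTRY_IMAGE/backend:latest $CI_REGISTRY_IMAGE/backend:$CI_COMMIT_REF_NAME
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker push $CI_REGISTRY_IMAGE/backend:$CI_COMMIT_REF_NAME
```

The trade-off: one component’s image may now be pushed even if the other component’s tests fail, although `deploy-vps` still runs only after the whole dockerize stage has succeeded.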

The dockerize jobs are nearly identical for the backend and frontend: apart from the directory they build in and the artifacts they depend on, the difference is in the naming of the built images. The GitLab Docker registry allows multiple images to be built and stored for any project, as long as their names start with `$CI_REGISTRY_IMAGE` and continue with up to two additional hierarchical name levels:

`registry.gitlab.com/<user>/<project>[/<optional level 1>[/<optional level 2>]]`
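Because the two dockerize jobs differ only in a few values, they could also be generated from a single template using `extends`. In this sketch, the hidden job `.docker-image` and the `COMPONENT` variable are illustrative names, not part of the original setup; the component name serves both as the directory to build in and as the first optional name level of the image.

```yaml
# Hidden template job: GitLab never runs jobs whose names start with a dot
.docker-image:
  tags:
    - docker
  stage: dockerize
  image: docker:latest
  services:
    - docker:dind
  script:
    # COMPONENT is set by each concrete job below
    - cd $COMPONENT
    - docker build -t $CI_REGISTRY_IMAGE/$COMPONENT:latest .
    - docker tag $CI_REGISTRY_IMAGE/$COMPONENT:latest $CI_REGISTRY_IMAGE/$COMPONENT:$CI_COMMIT_REF_NAME
    - docker login -u gitlab-ci-token -p $CI_JOB_TOKEN $CI_REGISTRY
    - docker push $CI_REGISTRY_IMAGE/$COMPONENT:$CI_COMMIT_REF_NAME

build-frontend-docker:
  extends: .docker-image
  variables:
    COMPONENT: frontend
  dependencies:
    - build-frontend-yarn

build-backend-docker:
  extends: .docker-image
  variables:
    COMPONENT: backend
  dependencies: []
```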

frontend/Dockerfile

```dockerfile
FROM nginx:alpine
COPY dist /usr/share/nginx/html
EXPOSE 80
```

Same story as in the examples above: take the compiled static files and serve them using NGINX.

backend/Dockerfile

```dockerfile
FROM node:alpine
WORKDIR /app
COPY . .
RUN yarn install --prod
VOLUME [ "/app/data" ]
ENV NODE_ENV=production
EXPOSE 3000
CMD [ "node", "src/app.js" ]
```

This one is a bit different. It uses a Node image (I prefer the alpine variants to save on image size), installs the production dependencies, configures Node to run in production mode, and then runs the backend app. It also defines a mount point for a volume, where the app will store its data persistently.

docker-compose.yml

```yaml
version: "3"

services:
  awesome-app-frontend:
    image: registry.gitlab.com/olexs/awesome-app.com/frontend:master
    expose:
      - "80"
    environment:
      - VIRTUAL_HOST=awesome-app.com
      - VIRTUAL_PORT=80
      - LETSENCRYPT_HOST=awesome-app.com
      - LETSENCRYPT_EMAIL=email@somewhere.tld
    restart: unless-stopped
    networks:
      - proxy

  awesome-app-backend:
    image: registry.gitlab.com/olexs/awesome-app.com/backend:master
    expose:
      - "3000"
    environment:
      - VIRTUAL_HOST=api.awesome-app.com
      - VIRTUAL_PORT=3000
      - LETSENCRYPT_HOST=api.awesome-app.com
      - LETSENCRYPT_EMAIL=email@somewhere.tld
    restart: unless-stopped
    networks:
      - proxy
    volumes:
      - data:/app/data

networks:
  proxy:
    external:
      name: nginx-proxy

volumes:
  data:
```

The compose file here has services configured for both the frontend and backend components of the awesome app. The backend service runs on the `api.` subdomain of the main app, and again, the NGINX reverse proxy takes care of making both containers available online and properly secured with Let’s Encrypt and a forced HTTPS redirect.

Because `data` is a named volume declared in the docker-compose.yml, it stays on the VPS after its initial creation: `docker-compose down` removes the containers but leaves named volumes alone unless it is called with `--volumes` (`-v`). This means that when we redeploy our app after pushing some code changes, the app data stays, even though the app containers themselves are destroyed, re-created from the new images and launched anew.

Of course you could add other containers to this setup, for example a non-Internet-facing backend or a database, as shown in the multi-container example in the previous post.
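As a hedged illustration of that idea (all added names are hypothetical): a database container that only the backend can reach, kept off the `nginx-proxy` network so it is not reachable from the reverse proxy. The frontend service is unchanged and left out for brevity, and `DATABASE_HOST` stands in for whatever setting your backend actually reads. Compose makes each service reachable on its networks under its service name, so the backend can connect to the host `awesome-app-db`.

```yaml
version: "3"

services:
  awesome-app-backend:
    image: registry.gitlab.com/olexs/awesome-app.com/backend:master
    expose:
      - "3000"
    environment:
      - VIRTUAL_HOST=api.awesome-app.com
      - VIRTUAL_PORT=3000
      - LETSENCRYPT_HOST=api.awesome-app.com
      - LETSENCRYPT_EMAIL=email@somewhere.tld
      # Hypothetical setting telling the backend where its database lives
      - DATABASE_HOST=awesome-app-db
    restart: unless-stopped
    networks:
      - proxy     # shared with the reverse proxy
      - internal  # shared with the database
    volumes:
      - data:/app/data

  # Hypothetical database service, attached only to the "internal" network
  awesome-app-db:
    image: postgres:12-alpine
    environment:
      - POSTGRES_PASSWORD=change-me  # placeholder, use a real secret
    restart: unless-stopped
    networks:
      - internal
    volumes:
      - db-data:/var/lib/postgresql/data

networks:
  proxy:
    external:
      name: nginx-proxy
  internal:

volumes:
  data:
  db-data:
```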

In conclusion

As with the previous post, I’ve been using the described setup for various side projects of mine for a while now, which is why I feel comfortable offering the recipe to others. It works nicely and saves a lot of the manual work otherwise needed to deploy an app reliably and safely, and to keep redeploying it as development progresses.

I hope this helps someone other than myself. Hit me up on Twitter if you found this guide helpful, or if you have any comments at all.